Overview

HyperComplEx is a hybrid knowledge graph embedding (KGE) framework that unifies hyperbolic, complex, and Euclidean geometries through relation-specific adaptive attention and a multi-space consistency loss. It models hierarchical, asymmetric, and translational relation patterns simultaneously, achieving state-of-the-art link prediction while scaling near-linearly from thousands to tens of millions of entities.

Pipeline

1. Embed entities and relations in three subspaces: hyperbolic (hierarchy), complex (asymmetry), and Euclidean (translation)

2. Compute subspace scores (Poincaré distance, Hermitian bilinear, translational L2)

3. Learn relation-specific attention weights α_r = softmax(w_r) to combine the subspace scores

4. Train with a self-adversarial ranking loss plus multi-space consistency and L2 regularization terms

5. Output link scores, learned attention per relation, and final embeddings
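The scoring path in steps 1 to 3 can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the HyperComplEx implementation: the hyperbolic score applies the relation as a Möbius translation of the head before taking the Poincaré distance (an assumption), the complex score is the ComplEx-style Hermitian product Re(Σ h·r·conj(t)), the Euclidean score is the TransE-style −‖h + r − t‖₂, and `w_r` is a hypothetical per-relation logit vector (one entry per subspace) whose softmax gives the attention weights. All function names are illustrative.

```python
import math


def _dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def mobius_add(x, y):
    """Möbius addition x ⊕ y in the Poincaré ball of curvature -1."""
    xy, xx, yy = _dot(x, y), _dot(x, x), _dot(y, y)
    denom = 1.0 + 2.0 * xy + xx * yy
    return [((1.0 + 2.0 * xy + yy) * a + (1.0 - xx) * b) / denom for a, b in zip(x, y)]


def poincare_distance(u, v):
    """Geodesic distance between two points strictly inside the unit ball."""
    diff = [a - b for a, b in zip(u, v)]
    arg = 1.0 + 2.0 * _dot(diff, diff) / ((1.0 - _dot(u, u)) * (1.0 - _dot(v, v)))
    return math.acosh(arg)


def hyperbolic_score(h, r, t):
    # Assumption: the relation acts as a Möbius translation of the head.
    return -poincare_distance(mobius_add(h, r), t)


def complex_score(h, r, t):
    # Hermitian bilinear (ComplEx-style) score Re(sum_i h_i * r_i * conj(t_i)).
    # Swapping h and t is equivalent to conjugating r, so the score is
    # asymmetric unless r is real.
    return sum(hi * ri * ti.conjugate() for hi, ri, ti in zip(h, r, t)).real


def euclidean_score(h, r, t):
    # Translational (TransE-style) score: -||h + r - t||_2.
    return -math.sqrt(sum((a + b - c) ** 2 for a, b, c in zip(h, r, t)))


def softmax(logits):
    m = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def fused_score(w_r, hyp, cpx, euc):
    """Attention-weighted sum of the three subspace scores.

    w_r: hypothetical per-relation logits (hyperbolic, complex, Euclidean).
    hyp/cpx/euc: (head, relation, tail) embeddings for each subspace.
    Returns (fused score, attention weights alpha_r).
    """
    alpha = softmax(w_r)
    scores = [hyperbolic_score(*hyp), complex_score(*cpx), euclidean_score(*euc)]
    return sum(a * s for a, s in zip(alpha, scores)), alpha


if __name__ == "__main__":
    hyp = ([0.1, 0.2], [0.05, -0.1], [0.15, 0.1])
    cpx = ([1 + 1j], [0.5 - 0.5j], [1 - 1j])
    euc = ([0.0, 1.0], [1.0, 0.0], [1.0, 1.0])
    score, alpha = fused_score([2.0, 0.5, 0.0], hyp, cpx, euc)
    print(f"fused score={score:.4f}, alpha={[round(a, 3) for a in alpha]}")
```

In a real model these loops would be batched tensor operations, and the attention logits would be trained jointly with the embeddings.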

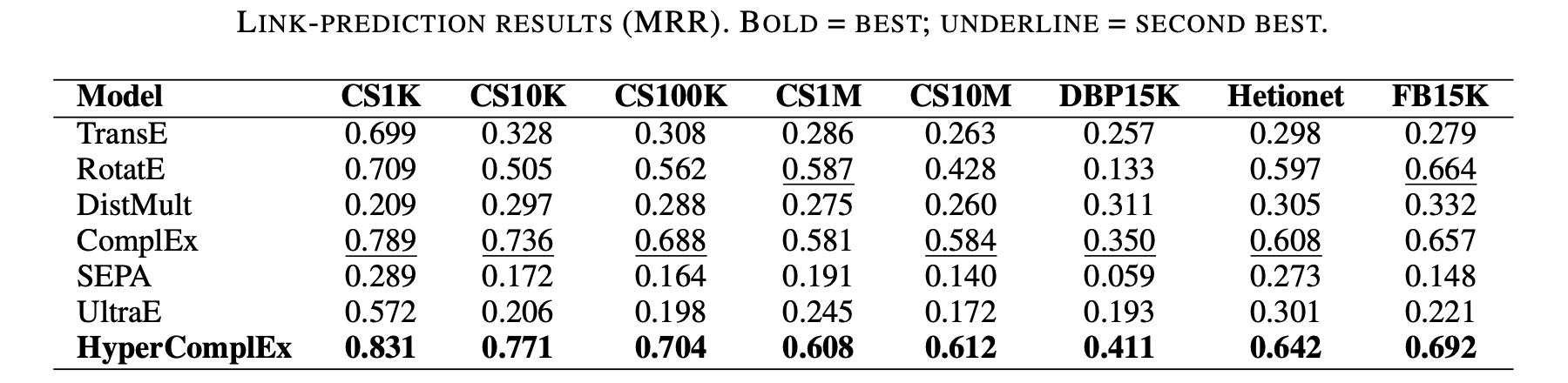
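The step 4 objective can be sketched as follows, assuming scores where higher means more plausible. The self-adversarial term follows the RotatE-style formulation: each negative is weighted by softmax(τ·s) over the negatives' scores, and those weights are treated as constants. The source does not give the multi-space consistency formula, so `consistency_loss` below is a hypothetical placeholder (mean squared gap between each subspace score and the fused score); the margin γ, temperature τ, and the λ weights are illustrative defaults, not reported hyperparameters.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def self_adversarial_loss(pos_score, neg_scores, gamma=9.0, temperature=1.0):
    """RotatE-style self-adversarial loss for one positive and its negatives.

    Each negative is weighted by softmax(temperature * score) over the
    negatives; in an autograd framework these weights would be detached.
    """
    m = max(neg_scores)
    exps = [math.exp(temperature * (s - m)) for s in neg_scores]
    z = sum(exps)
    weights = [e / z for e in exps]
    pos_term = -log_sigmoid(gamma + pos_score)
    neg_term = -sum(p * log_sigmoid(-gamma - s) for p, s in zip(weights, neg_scores))
    return pos_term + neg_term


def consistency_loss(subspace_scores, fused):
    # Hypothetical placeholder: mean squared gap between each subspace
    # score and the fused score.
    return sum((s - fused) ** 2 for s in subspace_scores) / len(subspace_scores)


def l2_penalty(params):
    # Sum of squared entries over all embedding vectors in the batch.
    return sum(x * x for vec in params for x in vec)


def total_loss(pos_score, neg_scores, subspace_scores, fused, params,
               lambda_cons=0.1, lambda_reg=1e-5):
    """Ranking loss + weighted consistency term + weighted L2 regularization."""
    return (self_adversarial_loss(pos_score, neg_scores)
            + lambda_cons * consistency_loss(subspace_scores, fused)
            + lambda_reg * l2_penalty(params))
```

The self-adversarial weighting concentrates the loss on negatives the model currently scores highly, which is why harder negatives raise the loss more than easy ones.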

Experiment Results

• Datasets: our CS scholarly KGs (1K → 10M papers), plus the Family, DBP15K, Hetionet, and FB15K benchmarks

• Performance: MRR 0.612 on CS-10M (+4.8% over the best baseline); consistent SOTA across all scales

• Efficiency: Near-linear training-time scaling (T ∝ |E|^1.06); ~85 ms/triple inference on CS-10M

• Interpretability: Relation-wise attention aligns with semantics (e.g., hyperbolic for hierarchies, complex for citations)
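Two quantities in these results can be made concrete. MRR is the mean of 1/rank of the true entity over test queries, and the exponent in T ∝ |E|^1.06 is the slope of a least-squares line through (log |E|, log T). For intuition, under this law a 10,000× increase in |E| multiplies training time by 10^(4 × 1.06) = 10^4.24 ≈ 17,400×, versus 10,000× for exactly linear scaling. The sketch below is illustrative: pessimistic tie handling is an assumption, and the timings in the usage example are synthetic values generated from the power law, not measurements.

```python
import math


def rank_of_true(true_score, candidate_scores):
    """1-based rank of the true entity among corrupted candidates (higher = better).

    candidate_scores should exclude the true entity and, in the filtered
    setting, any other entity forming a known-true triple. Ties count against
    the true entity (pessimistic ranking), which is an assumption.
    """
    return 1 + sum(1 for s in candidate_scores if s >= true_score)


def mean_reciprocal_rank(ranks):
    return sum(1.0 / r for r in ranks) / len(ranks)


def fit_scaling_exponent(sizes, times):
    """Least-squares slope b of log T = a + b * log |E|, i.e. T ∝ |E|**b."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(t) for t in times]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var = sum((x - mx) ** 2 for x in xs)
    return cov / var


if __name__ == "__main__":
    ranks = [rank_of_true(0.9, [0.1, 0.95, 0.5]), rank_of_true(0.8, [0.2, 0.3])]
    print("ranks:", ranks, "MRR:", mean_reciprocal_rank(ranks))  # ranks [2, 1], MRR 0.75
    sizes = [1e3, 1e4, 1e5, 1e6, 1e7]
    times = [2e-3 * n ** 1.06 for n in sizes]  # synthetic timings, not measured data
    print("fitted exponent:", round(fit_scaling_exponent(sizes, times), 4))
```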

Features

• Adaptive multi-space attention per relation type

• Multi-space consistency to coordinate subspaces without collapse

• Scalable design: mixed precision, sharded/cached embeddings, adaptive dimension allocation
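One common way to realize sharded, cached embeddings is sketched below. It is a hypothetical illustration, not the project's code: `ShardedEmbeddingStore` assigns entity rows to shards by `entity_id % num_shards` and keeps a small least-recently-used (LRU) cache of hot rows in front of them, using `collections.OrderedDict`. Shards are plain in-memory dicts here; in a real deployment each shard might live in a separate file, process, or device.

```python
from collections import OrderedDict


class ShardedEmbeddingStore:
    """Hypothetical sketch: modulo-sharded embedding rows behind an LRU cache."""

    def __init__(self, num_shards, cache_size):
        self.shards = [dict() for _ in range(num_shards)]
        self.cache = OrderedDict()
        self.cache_size = cache_size
        self.hits = 0
        self.misses = 0

    def _shard(self, entity_id):
        # Modulo assignment spreads consecutive IDs evenly across shards.
        return self.shards[entity_id % len(self.shards)]

    def put(self, entity_id, vector):
        self._shard(entity_id)[entity_id] = list(vector)
        self.cache.pop(entity_id, None)  # drop any stale cached copy

    def get(self, entity_id):
        if entity_id in self.cache:
            self.cache.move_to_end(entity_id)  # mark as most recently used
            self.hits += 1
            return self.cache[entity_id]
        self.misses += 1
        vector = self._shard(entity_id)[entity_id]
        self.cache[entity_id] = vector
        if len(self.cache) > self.cache_size:
            self.cache.popitem(last=False)  # evict the least recently used row
        return vector


if __name__ == "__main__":
    store = ShardedEmbeddingStore(num_shards=4, cache_size=2)
    for i in range(8):
        store.put(i, [float(i)])
    store.get(5)
    store.get(5)
    print("hits:", store.hits, "misses:", store.misses)  # hits: 1 misses: 1
```

A cache helps most on skewed-degree graphs, where a small set of high-degree entities recurs across many training triples.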

Tech Stack

Python, PyTorch, PyTorch Geometric, PyKEEN, NumPy, Pandas, SciPy, scikit-learn, NetworkX, PyYAML, tqdm, CUDA/Metal backends