Overview

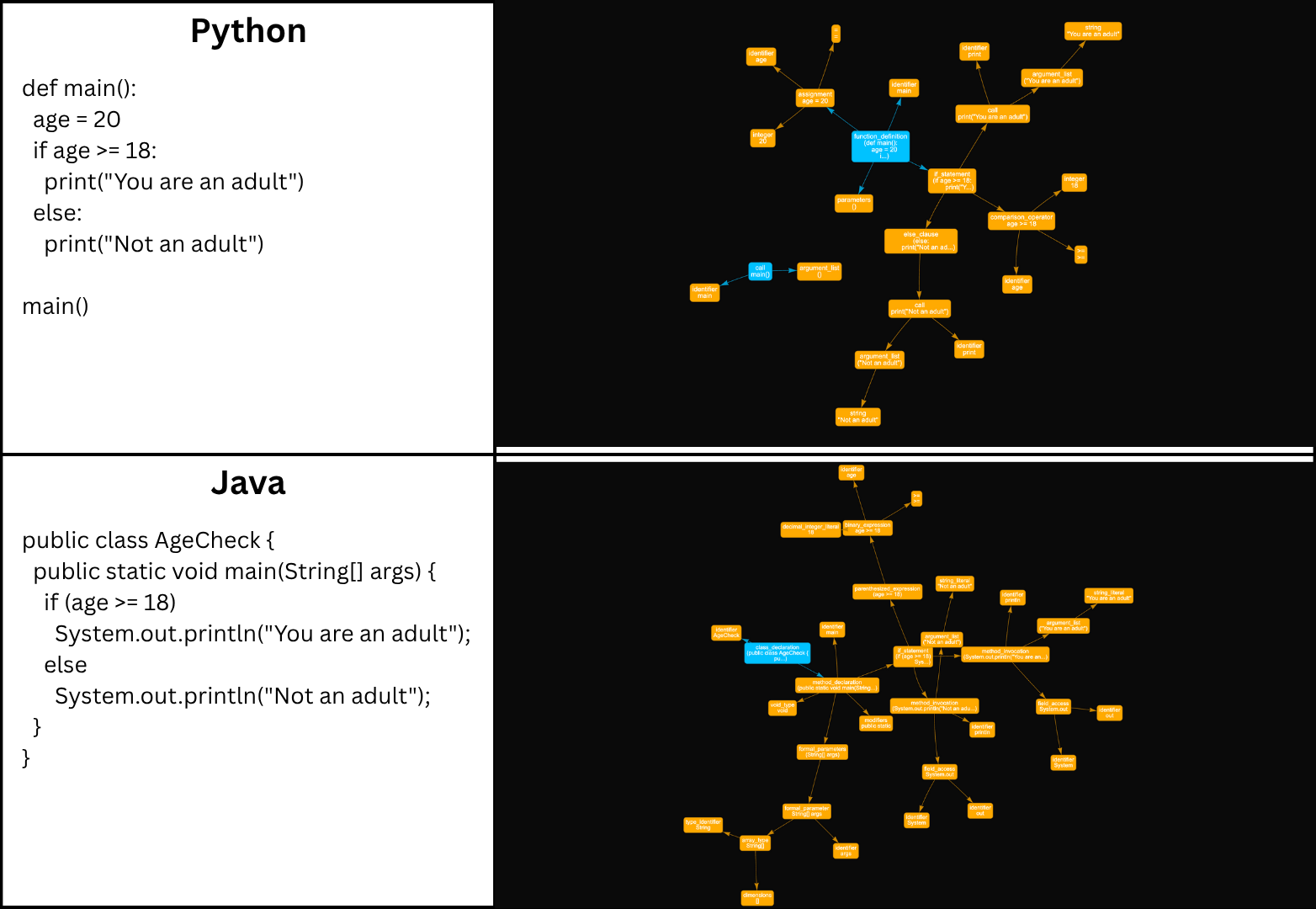

MLCPD is a large-scale, language-agnostic dataset that unifies syntactic and structural representations of code across 10 programming languages (C, C++, C#, Go, Java, JavaScript, Python, Ruby, Scala, TypeScript). It provides 7M+ parsed source files normalized under a universal AST schema, enabling consistent cross-language reasoning, structural learning, and multilingual software analysis. Each entry includes a lossless hierarchical JSON AST, language-level metadata, and abstracted node semantics, serialized in Parquet for scalable retrieval.

Pipeline

1. Curate permissively licensed code and normalize text (UTF-8, de-BOM, deduplication)

2. Parse with Tree-sitter grammars for 10 languages and extract full-fidelity ASTs

3. Linearize each AST into a flat node array with O(1) index-based node access; attach metadata & diagnostics

4. Categorize nodes into universal types (declarations, statements, expressions)

5. Build cross-language maps (e.g., function/class) for uniform querying

6. Validate JSON schema and write to Parquet for efficient loading
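
Steps 3 and 4 can be illustrated with a minimal sketch. It assumes the parsed tree is available as nested dictionaries with `type` and `children` keys; the `FlatNode` fields and the `UNIVERSAL_CATEGORY` table are hypothetical names chosen for illustration, not the dataset's actual schema.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Optional

# Hypothetical table mapping grammar-specific node types to universal categories.
UNIVERSAL_CATEGORY = {
    "function_definition": "declaration",
    "method_declaration": "declaration",
    "return_statement": "statement",
    "binary_operator": "expression",
}


@dataclass
class FlatNode:
    id: int                      # position in the flat array
    type: str                    # grammar-specific node type
    category: str                # universal category, "other" if unmapped
    parent: Optional[int]        # index of the parent, None for the root
    children: list[int] = field(default_factory=list)


def linearize(tree: dict[str, Any]) -> list[FlatNode]:
    """Flatten a nested tree into a pre-order list; a node's id is its index."""
    nodes: list[FlatNode] = []
    stack: list[tuple[dict[str, Any], Optional[int]]] = [(tree, None)]
    while stack:
        raw, parent = stack.pop()
        node_id = len(nodes)
        category = UNIVERSAL_CATEGORY.get(raw["type"], "other")
        nodes.append(FlatNode(node_id, raw["type"], category, parent))
        if parent is not None:
            nodes[parent].children.append(node_id)
        # Push children in reverse so they are popped left to right.
        for child in reversed(raw.get("children", [])):
            stack.append((child, node_id))
    return nodes


example_tree = {
    "type": "function_definition",
    "children": [
        {"type": "identifier"},
        {"type": "return_statement", "children": [{"type": "binary_operator"}]},
    ],
}
nodes = linearize(example_tree)
# Any node is reachable by index, without walking the tree.
print(nodes[3].type, nodes[3].category, nodes[3].parent)
```

Because each node stores the indices of its parent and children, a lookup such as `nodes[i]` is a constant-time list access, which is one plausible reading of the O(1) addressing described above.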

Dataset & Stats

• Records: 7,021,722 (success rate ≈ 99.99994%)

• Languages: 10 (balanced coverage)

• Disk storage: ~114 GB of Parquet files (~5.5× compression)

• Structures captured: complete AST nodes + cross-language schema mappings

• Use cases: cross-language representation learning, vulnerability analysis, explainable code intelligence
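
As a rough sanity check on the figures above, the following sketch derives a few implied quantities. It rests on assumptions not stated in the source: that 7,021,722 counts successfully parsed files, that 1 GB means 10^9 bytes, and that "5.5× compression" is the ratio of uncompressed size to on-disk size.

```python
records = 7_021_722          # assumed to be the number of successful parses
success_rate = 0.9999994     # 99.99994 % as a fraction
parquet_gb = 114             # approximate on-disk size
compression_ratio = 5.5      # assumed: uncompressed size / on-disk size

# Files attempted, and how many would have failed, under the assumption above.
attempted = records / success_rate
failures = attempted - records

# Implied uncompressed size and average on-disk footprint per record.
uncompressed_gb = parquet_gb * compression_ratio
avg_kb_per_record = parquet_gb * 1e9 / records / 1e3

print(f"implied failures: about {failures:.1f}")
print(f"implied uncompressed size: about {uncompressed_gb:.0f} GB")
print(f"average on-disk size per record: about {avg_kb_per_record:.1f} KB")
```

Under these assumptions the stated success rate corresponds to only a handful of failed files, and each record occupies on the order of tens of kilobytes on disk.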

Features

• Universal AST schema for structural homogeneity without losing language nuances

• Lossless node capture (tokens, delimiters, punctuation) + O(1) node addressing

• Cross-language function/class maps for uniform queries and analytics

• Scalable Parquet storage for fast, large-batch training and analysis
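
To make the idea of cross-language function/class maps concrete, here is a minimal sketch. It assumes each record exposes a `language` string and a flat `nodes` list whose entries carry a `type` key; these field names and the `CONCEPT_BY_LANGUAGE` table are hypothetical. The node type names are modeled on common Tree-sitter grammars and are illustrative only.

```python
from collections import Counter, defaultdict

# Hypothetical lookup: grammar-specific node type -> language-neutral concept.
CONCEPT_BY_LANGUAGE = {
    "python": {"function_definition": "function", "class_definition": "class"},
    "java": {"method_declaration": "function", "class_declaration": "class"},
    "javascript": {"function_declaration": "function", "class_declaration": "class"},
}


def build_concept_map(language, nodes):
    """Return {concept: [node indices]} for one record's flat node list."""
    table = CONCEPT_BY_LANGUAGE.get(language, {})
    concept_map = defaultdict(list)
    for index, node in enumerate(nodes):
        concept = table.get(node["type"])
        if concept is not None:
            concept_map[concept].append(index)
    return dict(concept_map)


def count_concept(records, concept):
    """Count occurrences of one concept per language across many records."""
    totals = Counter()
    for record in records:
        concept_map = build_concept_map(record["language"], record["nodes"])
        totals[record["language"]] += len(concept_map.get(concept, []))
    return dict(totals)


records = [
    {
        "language": "python",
        "nodes": [
            {"type": "module"},
            {"type": "class_definition"},
            {"type": "function_definition"},
            {"type": "function_definition"},
        ],
    },
    {
        "language": "java",
        "nodes": [
            {"type": "program"},
            {"type": "class_declaration"},
            {"type": "method_declaration"},
        ],
    },
]

# The same query works regardless of the source language's grammar.
print(count_concept(records, "function"))
```

The design choice here is to keep the original node types untouched and layer the neutral concepts on top as an index, so a uniform query does not discard language-specific detail.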

Tech. Stack

Python, Tree-sitter, Apache Parquet, Hugging Face Datasets, Pandas, NumPy, PyVis, NetworkX, Matplotlib, Seaborn, TQDM, JSON