Overview

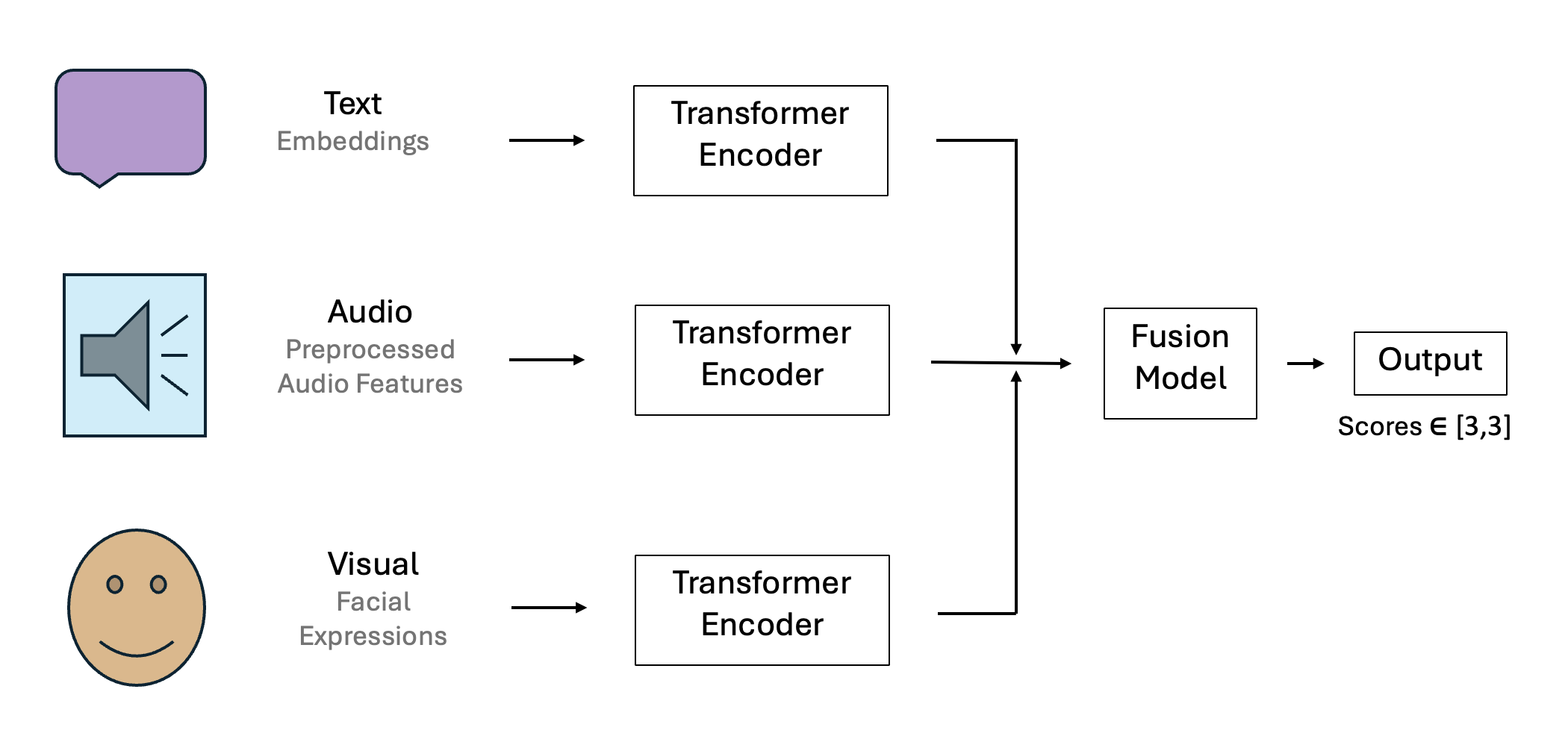

This project performs multimodal sentiment analysis on the CMU-MOSEI dataset, combining text, audio, and visual inputs. Each modality is encoded with a pre-trained BERT encoder, and the resulting embeddings are fused early so that a single classifier models sentiment jointly. Fusing before classification lets the model learn interactions between modalities instead of merging independent per-modality predictions.

On the test set, the model reaches 97.87% 7-class accuracy, an F1 score of 0.9682, and a mean absolute error (MAE) of 0.1060. Training used dropout, the Adam optimizer (lr = 1e-4), and early stopping. Results are reported for both 7-class and binary sentiment prediction.

Pipeline

1. Process text, audio, and visual inputs using BERT encoders

2. Concatenate modality-specific embeddings using early fusion

3. Pass the fused embedding through a classification head with ReLU, LayerNorm, and Dropout

4. Predict 7-class sentiment using softmax

5. Train using cross-entropy loss and monitor validation metrics
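The fusion and classification steps above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the class name `EarlyFusionHead`, the 768-dimensional embeddings, the hidden size of 256, and the exact layer ordering are all assumptions, and random tensors stand in for the encoder outputs.

```python
import torch
import torch.nn as nn


class EarlyFusionHead(nn.Module):
    """Hypothetical head: concatenate modality embeddings, then classify."""

    def __init__(self, text_dim=768, audio_dim=768, visual_dim=768,
                 hidden_dim=256, num_classes=7, dropout=0.3):
        super().__init__()
        fused_dim = text_dim + audio_dim + visual_dim
        # Layer order (ReLU, LayerNorm, Dropout) follows the pipeline list;
        # the hidden size is an assumption.
        self.classifier = nn.Sequential(
            nn.Linear(fused_dim, hidden_dim),
            nn.ReLU(),
            nn.LayerNorm(hidden_dim),
            nn.Dropout(dropout),
            nn.Linear(hidden_dim, num_classes),
        )

    def forward(self, text_emb, audio_emb, visual_emb):
        # Early fusion: concatenate along the feature dimension.
        fused = torch.cat([text_emb, audio_emb, visual_emb], dim=-1)
        return self.classifier(fused)  # raw logits, one row per sample


torch.manual_seed(42)
model = EarlyFusionHead()
batch = 4

# Random stand-ins for the pooled encoder outputs of each modality.
text_emb, audio_emb, visual_emb = (torch.randn(batch, 768) for _ in range(3))
labels = torch.randint(0, 7, (batch,))

logits = model(text_emb, audio_emb, visual_emb)
loss = nn.CrossEntropyLoss()(logits, labels)  # applies log-softmax internally
probs = torch.softmax(logits, dim=-1)         # 7-class probabilities
```

Note that `nn.CrossEntropyLoss` expects raw logits, so the softmax is only applied when probabilities are needed for prediction.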

Model & Training

Each modality is encoded separately using a BERT-based encoder. Audio and visual sequences are processed and mean pooled. The model uses early fusion with cross-attention across modalities and a dense classifier. Training was configured for up to 50 epochs, and early stopping ended it at epoch 25. Key configurations include:

• Optimizer: Adam (lr = 1e-4)

• Dropout: 0.3

• Batch size: 32

• 8 transformer layers, 16 heads

• Patience = 10, seed = 42
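The settings above, together with patience-based early stopping, might be expressed as in the sketch below. The dictionary keys, the `EarlyStopping` class, and the `run` helper are hypothetical names, the monitored quantity is assumed to be validation loss, and the loss values are synthetic rather than taken from the project's logs.

```python
# Values from the configuration list; key names are assumptions.
CONFIG = {
    "lr": 1e-4,
    "dropout": 0.3,
    "batch_size": 32,
    "num_layers": 8,
    "num_heads": 16,
    "max_epochs": 50,
    "patience": 10,
    "seed": 42,
}


class EarlyStopping:
    """Stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience):
        self.patience = patience
        self.best = float("inf")
        self.best_epoch = 0
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        if val_loss < self.best:
            self.best = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def run(val_losses, cfg):
    """Return (epoch at which training ended, epoch of the best loss)."""
    stopper = EarlyStopping(cfg["patience"])
    last_epoch = 0
    for epoch, val_loss in enumerate(val_losses[:cfg["max_epochs"]], start=1):
        last_epoch = epoch
        if stopper.step(epoch, val_loss):
            break
    return last_epoch, stopper.best_epoch


# Synthetic curve: improves for 15 epochs, then plateaus.
val_losses = [1.0 - 0.05 * e for e in range(15)] + [0.5] * 35
stopped_at, best_epoch = run(val_losses, CONFIG)
print(stopped_at, best_epoch)
```

With a patience of 10, a run halts ten epochs after its last improvement, which is one way a 50-epoch budget can end at epoch 25.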

Experiment Results

• Test 7-class Accuracy: 97.87%

• Test F1 Score: 0.9682

• Test MAE: 0.1060

• Binary Accuracy: 95.44%

• Binary F1 Score: 0.9767
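For reference, metrics of this kind can be computed from class predictions as sketched below. The conventions here are assumptions rather than the project's confirmed evaluation code: class indices 0 to 6 are mapped to sentiment scores -3 to 3, MAE is taken over those scores, and the binary split is negative versus non-negative. The labels in the example are made up for illustration.

```python
def to_score(class_idx):
    """Map a class index in 0..6 to a sentiment score in -3..3 (assumed)."""
    return class_idx - 3


def evaluate(y_true, y_pred):
    n = len(y_true)
    pairs = list(zip(y_true, y_pred))

    acc7 = sum(t == p for t, p in pairs) / n
    mae = sum(abs(to_score(t) - to_score(p)) for t, p in pairs) / n

    # Binary view: non-negative sentiment is the positive class (assumed).
    bin_pairs = [(to_score(t) >= 0, to_score(p) >= 0) for t, p in pairs]
    acc2 = sum(t == p for t, p in bin_pairs) / n
    tp = sum(t and p for t, p in bin_pairs)
    fp = sum((not t) and p for t, p in bin_pairs)
    fn = sum(t and (not p) for t, p in bin_pairs)
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0

    return {"acc7": acc7, "mae": mae, "acc2": acc2, "f1_binary": f1}


# Toy labels, not project data.
y_true = [0, 2, 3, 4, 6, 5]
y_pred = [0, 3, 3, 4, 5, 5]
metrics = evaluate(y_true, y_pred)
print(metrics)
```

Other binary conventions exist for this dataset, such as dropping neutral samples and comparing negative with positive only, so reported numbers depend on which one is used.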

Tech. Stack

Python, PyTorch, Transformers, BERT, CMU-MOSEI SDK, COVAREP, OpenFace, Multimodal Fusion