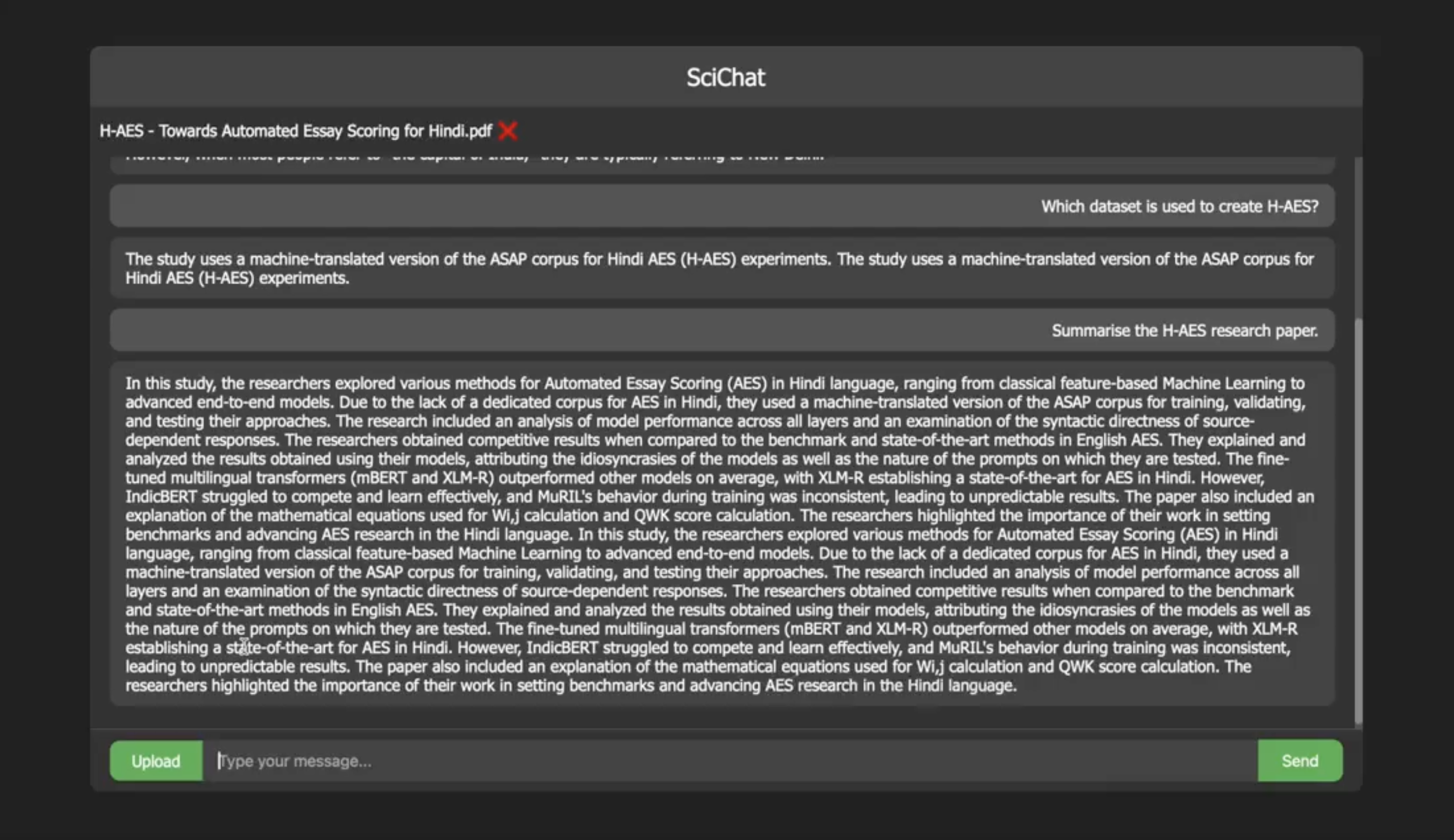

Overview

SciChat is a lightweight LLM-driven academic assistant built with Mistral 7B and LangChain. Users can chat with the model directly, or upload PDFs and get answers grounded in the content of the embedded documents. It is designed for researchers and students who want quicker access to the content of scientific papers through a simple web interface.

Pipeline

1. Upload PDF files through the web interface

2. Parse the documents with LangChain's PDF loader and split them into chunks

3. Embed each chunk using a sentence-transformers MiniLM model

4. Store and index the embeddings in a Pinecone vector database

5. Query the LLM directly (no files uploaded) or through the RAG pipeline (files uploaded)
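Steps 2 to 4 can be illustrated with a small dependency-free sketch. This is not SciChat's actual code: `split_text` stands in for a LangChain text splitter, `top_k` stands in for a Pinecone similarity query, and the chunk size, overlap, and toy vectors are assumptions chosen for illustration only.

```python
import math


def split_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list:
    """Split text into fixed-size character chunks that overlap by `overlap` characters.

    Hypothetical stand-in for a LangChain text splitter; sizes are illustrative.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks


def cosine(a, b) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query_vec, index, k: int = 3) -> list:
    """Return the texts of the k entries most similar to query_vec.

    `index` is a list of (chunk_text, vector) pairs; a vector database
    performs the same ranking at scale with an approximate search.
    """
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]
```

In the real pipeline the vectors would come from the MiniLM embedding model and the ranking would be done by Pinecone; the sketch only shows the shape of the data flowing between the steps.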

Model & Design

The system runs the open-source Mistral 7B Instruct model through Ollama for inference. When no files are uploaded, the question is sent to the LLM directly. When PDFs are uploaded, a Retrieval-Augmented Generation (RAG) flow built on LangChain, Pinecone, and HuggingFace embeddings retrieves the relevant passages and supplies them to the model as context, so answers are grounded in the uploaded documents. The app is served with Flask and is designed for modest hardware and quick setup.
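The two query modes described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's implementation: the prompt wording, the `build_prompt` and `generate` helpers, and the `mistral:instruct` model tag are hypothetical, and `generate` assumes Ollama is listening on its default local port and calls its `/api/generate` endpoint without streaming.

```python
import json
import urllib.request
from typing import List, Optional

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL_TAG = "mistral:instruct"  # assumed tag; use whichever tag was pulled locally


def build_prompt(question: str, contexts: Optional[List[str]] = None) -> str:
    """Return the bare question (direct mode) or a context-grounded prompt (RAG mode)."""
    question = question.strip()
    if not contexts:
        return question  # no uploaded files: prompt the LLM directly
    numbered = "\n\n".join(f"[{i}] {c.strip()}" for i, c in enumerate(contexts, 1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{numbered}\n\nQuestion: {question}"
    )


def generate(prompt: str) -> str:
    """Send a prompt to a locally running Ollama server and return the generated text."""
    payload = json.dumps({"model": MODEL_TAG, "prompt": prompt, "stream": False}).encode()
    request = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

A Flask route could then call `generate(build_prompt(question, retrieved_chunks))`, passing an empty list when no PDFs have been uploaded, so that both modes share one code path.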

Features

• Natural language chat with or without documents

• PDF support for uploading, indexing, and retrieval

• Efficient vector search via Pinecone

• Lightweight deployment using a Flask backend

• Reusable embedding cache with update detection
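How the embedding cache detects updates is not specified here. One common approach, shown below purely as an assumption, is to store a SHA-256 digest of each PDF in a small JSON manifest and re-embed a file only when its digest changes. The function names and the manifest layout are hypothetical.

```python
import hashlib
import json
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in 1 MiB blocks."""
    hasher = hashlib.sha256()
    with path.open("rb") as handle:
        for block in iter(lambda: handle.read(1 << 20), b""):
            hasher.update(block)
    return hasher.hexdigest()


def load_manifest(manifest_path: Path) -> dict:
    """Load the {filename: digest} manifest, or an empty one if none exists yet."""
    if manifest_path.exists():
        return json.loads(manifest_path.read_text())
    return {}


def needs_reindex(pdf_path: Path, manifest: dict) -> bool:
    """True if the file is new or its content changed since it was last indexed."""
    return manifest.get(pdf_path.name) != file_digest(pdf_path)


def mark_indexed(pdf_path: Path, manifest: dict, manifest_path: Path) -> None:
    """Record the file's current digest after its chunks have been embedded and stored."""
    manifest[pdf_path.name] = file_digest(pdf_path)
    manifest_path.write_text(json.dumps(manifest, indent=2))
```

Hashing content rather than comparing modification times means a re-uploaded but unchanged PDF is not embedded again, at the cost of reading each file once per check.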

Tech Stack

Python, Flask, Ollama, Mistral 7B, LangChain, Pinecone, sentence-transformers, HTML/CSS/JS